eJournal: uffmm.org,

ISSN 2567-6458, 13.February 2019

Email: info@uffmm.org

Author: Gerd Doeben-Henisch

Email: gerd@doeben-henisch.de

Last corrections: 14.February 2019 (added some more keywords; added emphasis for central words)

Change: 5.May 2019 (adding the aspect of simulation and gaming; extending the view of the driving actors)

CONTEXT

An overview of the enhanced AAI theory version 2 can be found here. In this post we talk about the blueprint of the whole AAI analysis process. I leave out the topic of actor models (AM); the aspect of simulation and gaming is mentioned only briefly. For these topics see other posts.

THE AAI ANALYSIS BLUEPRINT

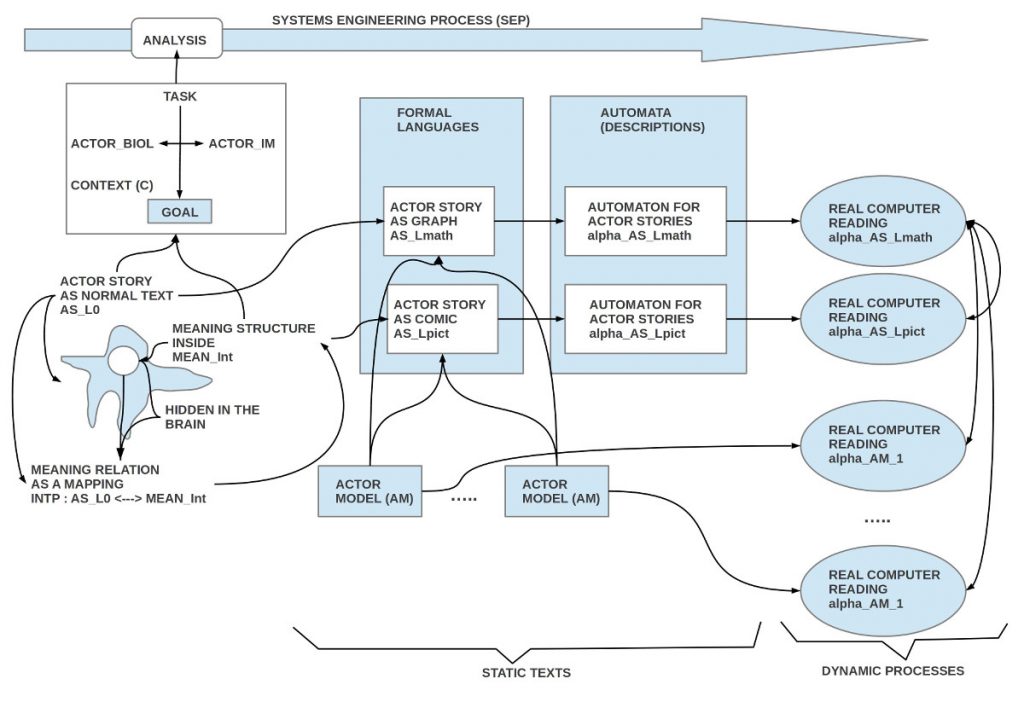

The Actor-Actor Interaction (AAI) analysis is understood here as part of an embracing systems engineering process (SEP), which starts with the statement of a problem (P) that includes a vision (V) of an improved alternative situation. It then has to be analyzed what such a new improved situation S+ looks like and how certain tasks (T) can be realized in an improved way.

DRIVING ACTORS

The driving actors of such an AAI analysis are at least one stakeholder (STH) and one expert (EXPaai) with sufficient AAI experience. The stakeholder communicates a problem P and an envisioned solution (ES) to the expert, who then takes the lead in transforming the problem and the envisioned solution into a working solution (WS).

In the classical industrial case the stakeholder can be a group of managers from some company, and the expert is likewise represented by a whole team of experts from different disciplines, with the AAI perspective as the leading perspective.

In another case, which I will call here the communal case (e.g. a whole city), the stakeholders as well as the experts are members of the communal entity. As in the industrial case, there is some commonly accepted problem P combined with a first envisioned solution ES, which shall be analyzed: What is needed to make it work? Can it work at all? What are the costs? Many other questions can arise. Including all relevant experience and knowledge from all participants is at the center of the communication, and transforming this available knowledge into a working solution which satisfies all stated requirements of all participants is a central condition for the success of the project.

EPISTEMOLOGY

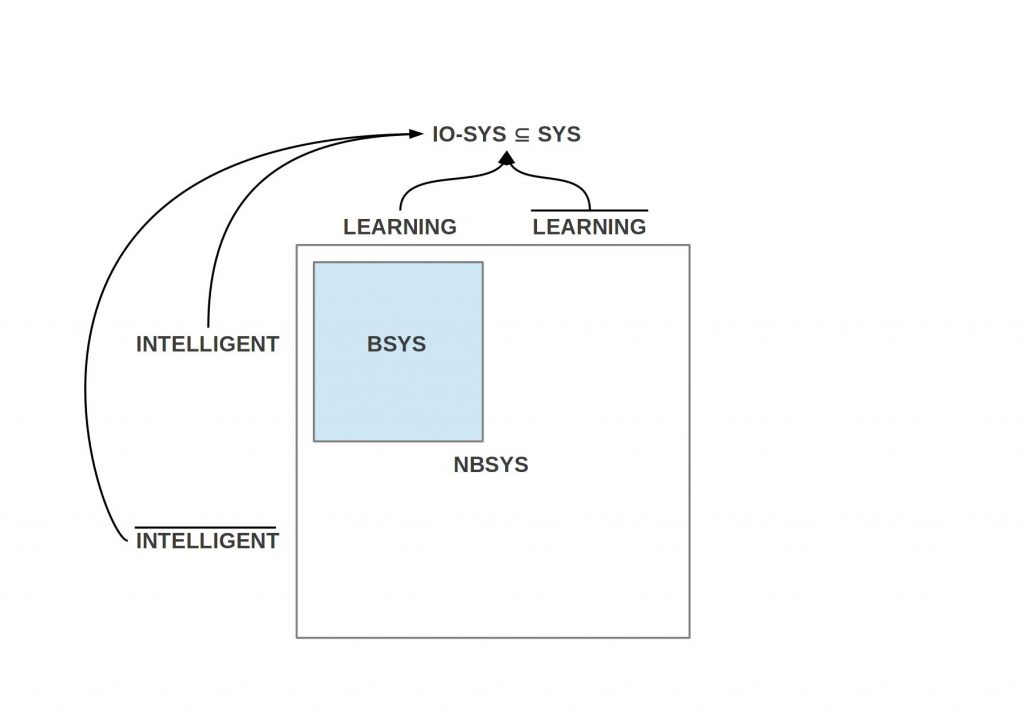

It has to be taken into account that the driving actors are able to do this job because their bodies contain brains (BRs), which in turn include some consciousness (CNS). The processes and states beyond consciousness are here called ‘unconscious’, and the set of all these unconscious processes is called ‘the Unconsciousness’ (UCNS).

For more details on the cognitive processes see the post on the philosophical framework as well as the post on the bottom-up process. Both posts shall be integrated into one coherent view in the future.

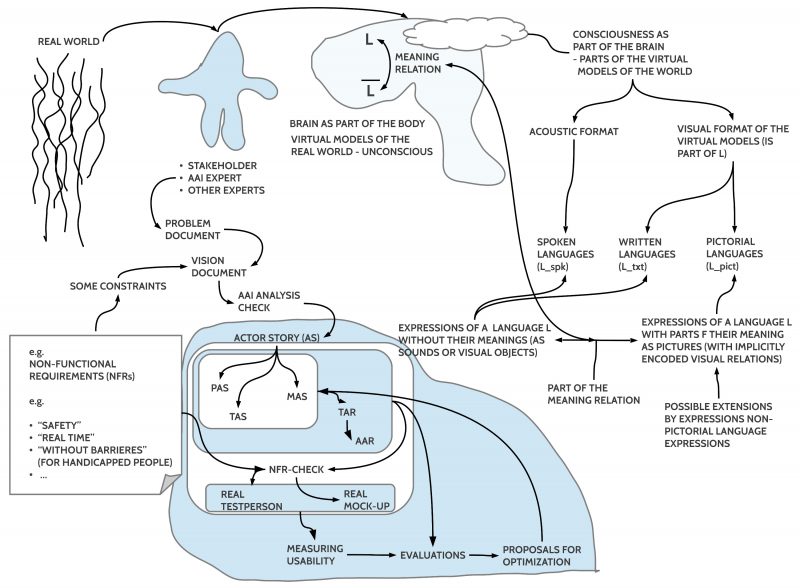

SEMIOTIC SUBSYSTEM

An important set of substructures of the unconsciousness are those which enable symbolic language systems, with so-called expressions (L) on one side and so-called non-expressions (~L) on the other. Embedded in a meaning relation (MNR), the set of non-expressions ~L functions as the meaning (MEAN) of the expressions L, written as a mapping MNR: L <-> ~L. Depending on the sensors involved, the expressions L can occur as acoustic events L_spk, as written visual patterns L_txt, as pictures L_pict, or in other formats which will not be discussed here. The non-expressions can occur in every format which the brain can handle.

While written (symbolic) expressions L_txt are associated with the intended meaning only through encoded mappings in the brain, the spoken expressions L_spk as well as the pictorial ones L_pict can show some similarities with the intended meaning. With acoustic expressions one can ‘imitate’ some sounds which are part of a meaning; pictorial expressions can ‘imitate’ the visual experience of the intended meaning to an even higher degree, although clearly not for every kind of meaning.
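The meaning relation MNR: L <-> ~L can be illustrated with a small data structure. The following is only a minimal sketch: the class `MeaningRelation`, its method names, and the placeholder `"DOG_CONCEPT"` are assumptions made for illustration, not part of the AAI theory. It assumes that the relation is many-to-many and that each expression is tagged with its format (L_txt, L_spk, L_pict).

```python
from collections import defaultdict


class MeaningRelation:
    """Toy model of MNR: L <-> ~L as a many-to-many relation (hypothetical)."""

    def __init__(self):
        self._to_meaning = defaultdict(set)      # expression -> non-expressions
        self._to_expression = defaultdict(set)   # non-expression -> expressions

    def associate(self, expression, meaning):
        """Store the pair in both directions, so lookup works either way."""
        self._to_meaning[expression].add(meaning)
        self._to_expression[meaning].add(expression)

    def meanings_of(self, expression):
        return set(self._to_meaning.get(expression, set()))

    def expressions_for(self, meaning):
        return set(self._to_expression.get(meaning, set()))


mnr = MeaningRelation()
# "DOG_CONCEPT" is a stand-in for some internal (non-expression) brain state.
mnr.associate(("L_txt", "dog"), "DOG_CONCEPT")
mnr.associate(("L_spk", "/dog/"), "DOG_CONCEPT")
mnr.associate(("L_pict", "dog.png"), "DOG_CONCEPT")
```

In this sketch the same non-expression is reachable from a written, a spoken, and a pictorial expression, which mirrors the idea that the format of an expression depends on the sensors involved while the meaning side stays the same.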

DEFINING THE MAIN POINT OF REFERENCE

Because the space of possible problems and visions is nearly infinitely large, one has to define for a certain process the problem of the actual process together with the vision of a ‘better state of the affairs’. This is realized by a description of the problem in a problem document D_p as well as in a vision statement D_v. Because a vision usually does not come without a given context, one has to add all the constraints (C) which have to be taken into account for the possible solution. Examples of constraints are ‘non-functional requirements’ (NFRs) like “safety” or “real time” or “without barriers” (for handicapped people). Definitions of win-lose states as part of a game are also part of the non-functional requirements.

AAI ANALYSIS – BASIC PROCEDURE

If the AAI check has been successful and there is at least one task T to be done in an assumed environment ENV, with at least one executing actor A_exec and one assisting actor A_ass in this task, then the AAI analysis can start.

ACTOR STORY (AS)

The main task is to elaborate a complete description of a process which includes a start state S* and a goal state S+, where the participating executing actors A_exec can reach the goal state S+ by doing some actions. While the imagined process p_v is a virtual (= cognitive/mental) model of an intended real process p_e, this virtual model p_v can only be communicated by symbolic expressions L embedded in a meaning relation. Thus the elaboration/construction of the intended process will be realized by using appropriate expressions L embedded in a meaning relation. This can be understood as a basic mapping of sensor-based perceptions of the supposed real world into some abstract virtual structures automatically (unconsciously) computed by the brain. A special kind of this mapping is the case of measurement.

In this text three types of symbolic expressions L will be used in particular: (i) pictorial expressions L_pict, (ii) textual expressions of a natural language L_txt, and (iii) textual expressions of a mathematical language L_math. The meaning part of these symbolic expressions as well as the expressions themselves will be called here an actor story (AS), with the different modes pictorial AS (PAS), textual AS (TAS), and mathematical AS (MAS).

The basic elements of an actor story (AS) are states which represent sets of facts. A fact is an expression of some defined language L which can be decided as being true or not in a real situation (the past and the future are special cases for such truth clarifications). Facts can be identified as actors, which can act on their own. The transformation from one state to a follow-up state has to be described with sets of change rules. The combination of states and change rules mathematically defines a directed graph (G).
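How states, facts, and change rules together yield a directed graph can be sketched in a few lines. This is a minimal illustration under assumptions of my own: a state is modelled as a `frozenset` of fact strings, a change rule as a condition plus facts to remove and to add, and the names `ChangeRule` and `build_graph` as well as the door example are hypothetical. The exploration only terminates if the set of reachable states is finite.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChangeRule:
    """Hypothetical change rule: if `condition` holds, remove and add facts."""
    name: str
    condition: frozenset
    remove: frozenset
    add: frozenset

    def applies_to(self, state):
        return self.condition <= state

    def apply(self, state):
        return frozenset((state - self.remove) | self.add)


def build_graph(start, rules):
    """Explore all states reachable from `start`.

    Returns (states, edges); each edge is (state, rule name, follow-up state).
    """
    states = {start}
    edges = set()
    frontier = [start]
    while frontier:
        state = frontier.pop()
        for rule in rules:
            if rule.applies_to(state):
                successor = rule.apply(state)
                edges.add((state, rule.name, successor))
                if successor not in states:
                    states.add(successor)
                    frontier.append(successor)
    return states, edges


start = frozenset({"door is closed", "user is outside"})
rules = [
    ChangeRule("open door",
               condition=frozenset({"door is closed"}),
               remove=frozenset({"door is closed"}),
               add=frozenset({"door is open"})),
    ChangeRule("enter",
               condition=frozenset({"door is open", "user is outside"}),
               remove=frozenset({"user is outside"}),
               add=frozenset({"user is inside"})),
]
states, edges = build_graph(start, rules)
```

The nodes of the resulting graph are the states and the labelled edges are the applications of change rules; in the door example this gives three states connected by two edges.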

Based on such a graph it is possible to derive an automaton (A) which can be used as a simulator. A simulator allows simulations. A concrete simulation takes a start state S0 as the actual state S* and computes, with the aid of the change rules, one follow-up state S1. This follow-up state then becomes the new actual state S*. Thus the simulation constitutes an ongoing process which can in general be infinite. To make the simulation finite one has to define some stop criteria (C*). A simulation can be passive, running without any interruption, or interactive. The interactive mode allows different external actors to select certain real values for the available variables of the actual state.
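The simulation loop described above can be sketched as follows. This is only an illustration under stated assumptions: a state is modelled as a dictionary of variables, a change rule as a function returning a follow-up state (or `None` if it does not apply), and a stop criterion as a predicate on states. The function `simulate`, the parameter `external_input`, the safety cap `max_steps`, and the tank example are hypothetical and not taken from the AAI theory.

```python
def simulate(start_state, change_rules, stop_criteria,
             external_input=None, max_steps=1000):
    """Run a simulation from S0 and return the trace of visited states.

    external_input: optional callback for the interactive mode; it receives
    the actual state and returns new values for some of its variables.
    """
    actual = dict(start_state)          # S0 becomes the actual state S*
    trace = [dict(actual)]
    for _ in range(max_steps):          # cap, in case no stop criterion fires
        if any(criterion(actual) for criterion in stop_criteria):
            break
        if external_input is not None:  # interactive mode
            actual.update(external_input(actual))
        successor = None
        for rule in change_rules:       # first applicable rule wins
            successor = rule(actual)
            if successor is not None:
                break
        if successor is None:           # no rule applies: process ends
            break
        actual = successor              # follow-up state is the new S*
        trace.append(dict(actual))
    return trace


def fill_rule(state):
    """Change rule: the level rises by the current inflow."""
    return {**state, "level": state["level"] + state["inflow"]}


def tank_full(state):
    """Stop criterion: the tank has reached its capacity."""
    return state["level"] >= 5.0


s0 = {"level": 0.0, "inflow": 1.0}
passive_trace = simulate(s0, [fill_rule], [tank_full])
interactive_trace = simulate(s0, [fill_rule], [tank_full],
                             external_input=lambda s: {"inflow": 2.5})
```

In the passive run the tank fills in five steps; in the interactive run an external actor raises the inflow, so the stop criterion is reached after two steps.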

If certain win-lose states have been defined in the problem definition, then one can turn an interactive simulation into a game where the external actors can try to manipulate the process so as to reach one of the defined win-states. As soon as someone (which can be a team) has reached a win-state, the responsible actor (or team) has won. Such games can be repeated to allow accumulation of wins (or losses).
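Turning an interactive simulation into a game can be sketched in the same spirit. The sketch assumes that players take turns, that each player is a named strategy function which chooses an input for the actual state, and that win and lose states are given as predicates. The names `play_round` and `play_series` and the counting game (reach exactly 10 by adding 1 to 3 per turn) are invented for illustration only.

```python
from collections import Counter


def play_round(start_state, step, is_win, is_lose, players, max_steps=100):
    """Play one game; return the winner's name, or None if nobody wins."""
    state = dict(start_state)
    for turn in range(max_steps):
        name, strategy = players[turn % len(players)]   # players alternate
        state = step(state, strategy(state))
        if is_win(state):
            return name          # the actor who reached the win-state wins
        if is_lose(state):
            return None
    return None


def play_series(rounds, *args, **kwargs):
    """Repeat the game and accumulate wins per player."""
    score = Counter()
    for _ in range(rounds):
        winner = play_round(*args, **kwargs)
        if winner is not None:
            score[winner] += 1
    return score


# Hypothetical example game: add 1..3 per turn, reach exactly 10 to win.
def step(state, move):
    return {"total": state["total"] + move}


def greedy(state):
    return min(3, 10 - state["total"])


def cautious(state):
    return 1


players = [("alice", greedy), ("bob", cautious)]
game = ({"total": 0}, step,
        lambda s: s["total"] == 10,     # win-state
        lambda s: s["total"] > 10,      # lose-state
        players)
winner = play_round(*game)
score = play_series(3, *game)
```

Because both strategies here are deterministic, every repetition has the same outcome; with human players or randomized strategies the accumulated score would vary between rounds.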

Gaming allows a far better experience of the advantages or disadvantages of an actor story than a rather loose simulation. Gaming therefore makes it quite likely that relevant aspects of an actor story and its given constraints are detected, which increases the probability of improving the whole concept.

Based on an actor story with a simulator it is possible to increase the cognitive power of exploring the future even more. One can define an oracle algorithm as well as different kinds of intelligent algorithms to support the human actor further. This has to be described in other posts.

TAR AND AAR

If the actor story is completed (in a certain version v_i), then one can extract from the story the input-output profiles of every participating actor. This list represents the task-induced actor requirements (TAR). If one is looking for concrete real persons to do the job of an executing actor, the TAR can be used as a benchmark for assessing candidates for this job. The profiles of the real persons are called here actor-actor induced requirements (AAR), that is, the real profile compared with the ideal profile of the TAR. If the ‘distance’ between AAR and TAR is above some threshold, then the candidate either has to be rejected or can be offered some training to improve his AAR; the other option is to change the conditions of the TAR in such a way that the TAR is closer to the AARs.

The TAR is valid for the executing actors A_exec as well as for the assisting actors A_ass.
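The extraction of a TAR from an actor story and its comparison with a candidate's AAR can be sketched as follows. The text does not define a concrete distance measure, so the one used here is an assumption: the share of required input-output pairs which the candidate does not show, where a smaller value means a better match. The story format, the function names `extract_tar` and `profile_distance`, and the login example are likewise hypothetical.

```python
def extract_tar(actor_story, actor):
    """Collect the input-output profile of one actor from the story steps."""
    return {(step["input"], step["output"])
            for step in actor_story if step["actor"] == actor}


def profile_distance(tar, aar):
    """Assumed measure: fraction of TAR pairs missing from the AAR (0.0..1.0)."""
    if not tar:
        return 0.0
    return len(tar - aar) / len(tar)


# Hypothetical actor story: each step names the actor, its input and output.
actor_story = [
    {"actor": "A_exec", "input": "sees login form", "output": "enters password"},
    {"actor": "A_ass", "input": "receives password", "output": "shows menu"},
    {"actor": "A_exec", "input": "sees error message", "output": "retries login"},
]

tar_exec = extract_tar(actor_story, "A_exec")

# Observed profile of one real candidate (invented test data).
candidate_aar = {("sees login form", "enters password")}
distance = profile_distance(tar_exec, candidate_aar)
```

With such a measure, rejecting a candidate, offering training, or relaxing the TAR become decisions about one number compared against a chosen threshold; which threshold is appropriate remains a matter for the concrete project.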

CONSTRAINTS CHECK

If the actor story has reached a certain degree of completion in some version v_i, one has to check whether the different constraints which accompany the vision document are satisfied by the story: AS_vi |- C.

Such an evaluation is only possible if the constraints can be interpreted with regard to the actor story AS in version v_i in such a way that the constraints can be decided.

For many constraints it can happen that they cannot be decided, or not completely, on the level of the actor story, but only in a later phase of the systems engineering process, when the actor story is implemented in software and hardware.
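The constraints check AS_vi |- C, including the case of constraints which cannot yet be decided, can be sketched with a three-valued verdict. This is an illustration under assumptions: the actor story is reduced to a list of states (sets of facts), a decidable constraint is a predicate which must hold in every state, and a constraint without such a predicate is reported as undecided at this level. The names `Verdict` and `check_constraints` and the example facts are hypothetical.

```python
from enum import Enum


class Verdict(Enum):
    SATISFIED = "satisfied"
    VIOLATED = "violated"
    UNDECIDED = "undecided at the level of the actor story"


def check_constraints(story_states, constraints):
    """Check every constraint against all states of the actor story.

    constraints: mapping from constraint name to a predicate on states,
    or to None if the constraint cannot be interpreted on this level.
    """
    report = {}
    for name, predicate in constraints.items():
        if predicate is None:
            report[name] = Verdict.UNDECIDED
        elif all(predicate(state) for state in story_states):
            report[name] = Verdict.SATISFIED
        else:
            report[name] = Verdict.VIOLATED
    return report


# Hypothetical actor story with three states.
story_states = [
    {"door is closed", "lift is idle"},
    {"door is closed", "lift is moving"},
    {"door is open", "lift is idle"},
]

constraints = {
    # Decidable on the story level: never move with an open door.
    "safety": lambda s: not {"door is open", "lift is moving"} <= s,
    # Decidable, and violated by the third state of this story.
    "door always closed": lambda s: "door is closed" in s,
    # Only decidable after implementation in software and hardware.
    "real time": None,
}

report = check_constraints(story_states, constraints)
```

Keeping the undecided case as an explicit verdict makes visible which constraints have to be revisited in a later phase of the systems engineering process.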

MEASURING OF USABILITY

Using the actor story as a benchmark, one can assess the usability of the whole process by running usability tests.